Improving style-tuned models

Models tuned on a specific style often produce images that do not match that style well. We argue that this is caused by a discrepancy between training and inference: the training inputs contain a signal leak whose distribution differs from a standard multivariate Gaussian, whereas the initial latents at inference contain no signal leak. We fix this discrepancy by modelling the signal leak present during training and including a signal leak at inference time as well.
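The idea can be sketched in a few lines of NumPy. This is only a minimal illustration under stated assumptions, not the repository's implementation: the signal leak is modelled here as an independent Gaussian per latent element, the helper names `fit_signal_leak` and `initial_latents_with_leak` are hypothetical, the style latents are random stand-in data, and the value of `alpha_bar_T` is an approximate figure for the Stable Diffusion v1 noise schedule.

```python
import numpy as np


def fit_signal_leak(style_latents):
    """Estimate a per-element Gaussian from style latents of shape (N, C, H, W).

    Assumption: each latent element is modelled independently.
    """
    return style_latents.mean(axis=0), style_latents.std(axis=0)


def initial_latents_with_leak(mean, std, alpha_bar_T, rng):
    """Sample initial latents that mimic the noisiest training inputs.

    Training sees sqrt(alpha_bar_T) * x0 + sqrt(1 - alpha_bar_T) * noise,
    so a sample from the fitted style distribution plays the role of x0.
    """
    leak = mean + std * rng.standard_normal(mean.shape)
    noise = rng.standard_normal(mean.shape)
    return np.sqrt(alpha_bar_T) * leak + np.sqrt(1.0 - alpha_bar_T) * noise


rng = np.random.default_rng(0)

# Stand-in data: in practice these would be encoded latents of the style images.
style_latents = 0.5 + 0.1 * rng.standard_normal((64, 4, 8, 8))
mean, std = fit_signal_leak(style_latents)

# Assumption: approximate cumulative alpha at the last timestep for SD v1.
alpha_bar_T = 0.0047

x_T = initial_latents_with_leak(mean, std, alpha_bar_T, rng)
print(x_T.shape)
```

Setting `alpha_bar_T` to 0 recovers the usual pure-noise initial latents, which makes the difference between the two inference settings explicit.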

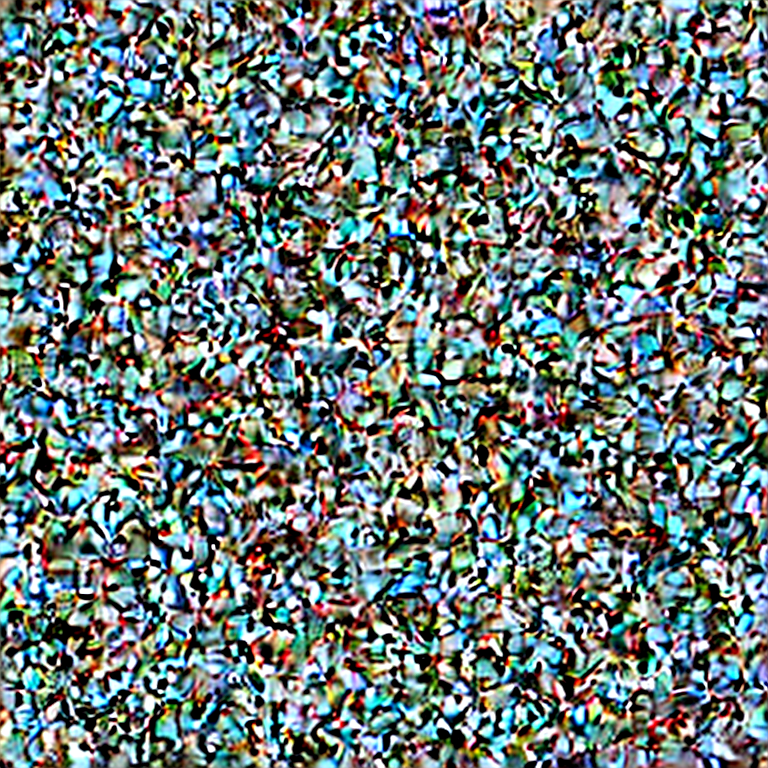

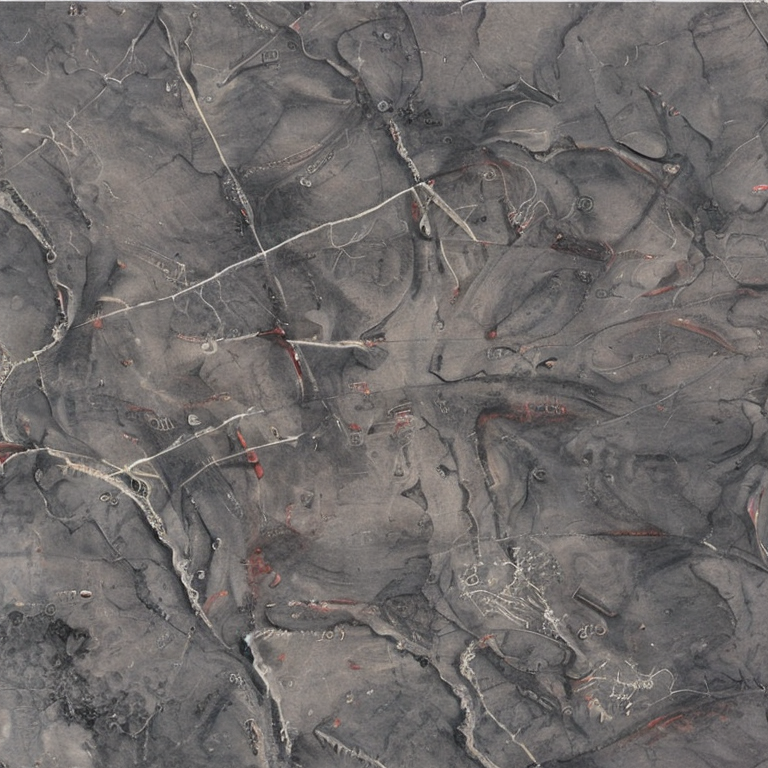

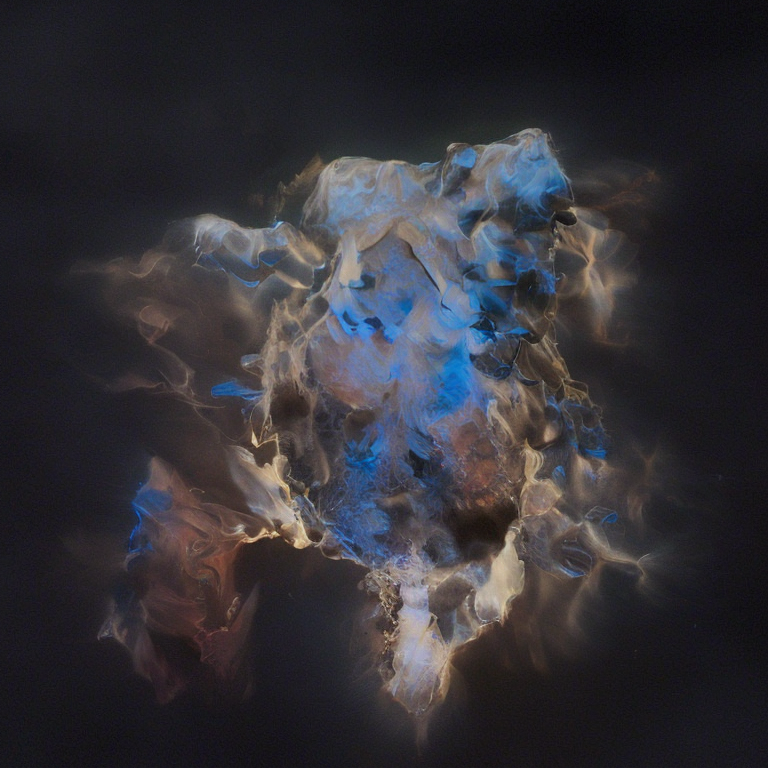

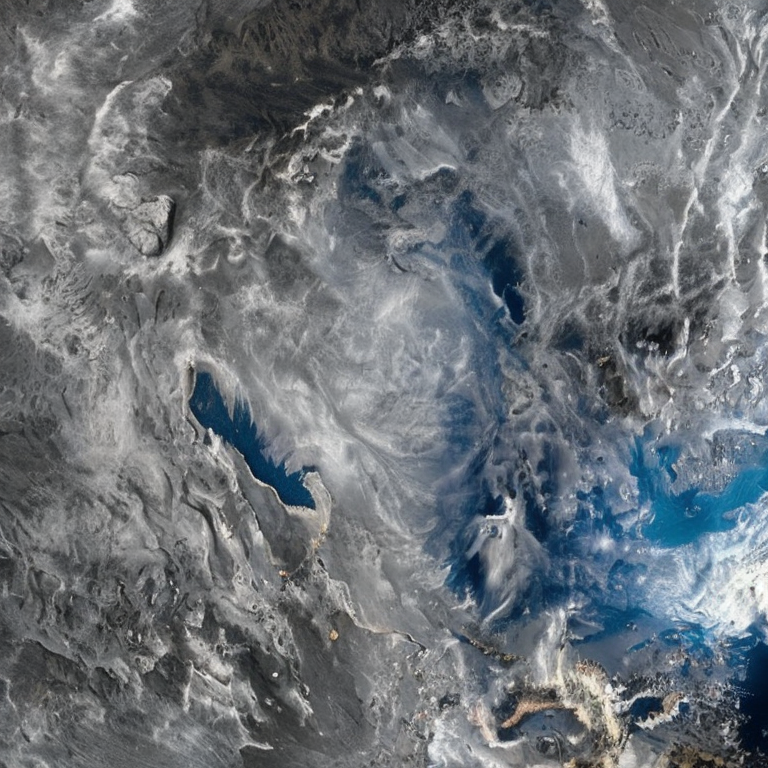

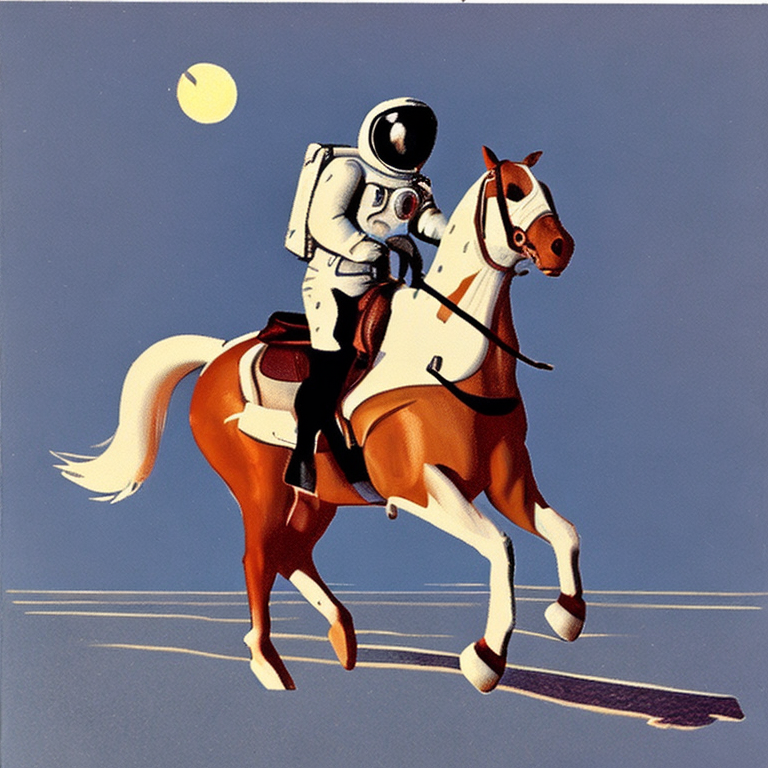

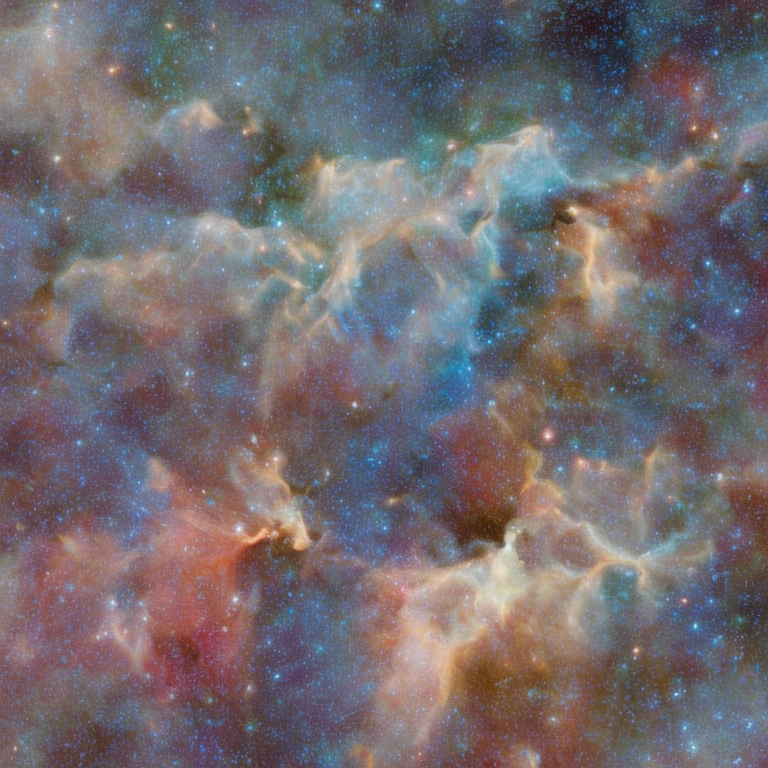

Model: sd-dreambooth-library/nasa-space-v2-768, with guidance_scale = 1

Prompt: "A very dark picture of the sky, Nasa style"

| Initial latents | Generated image (original) | + Signal Leak | Generated image (ours) |
|---|---|---|---|

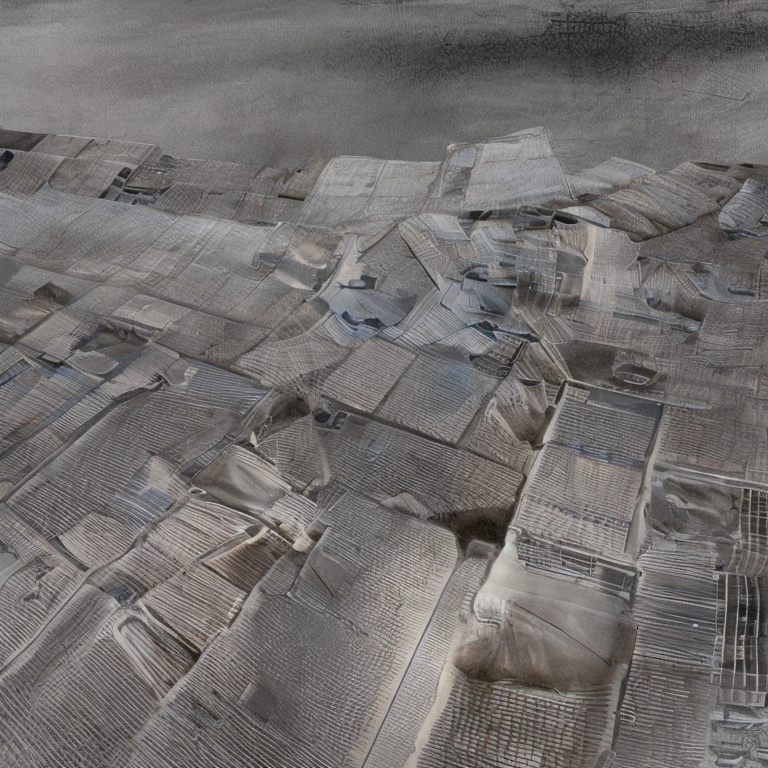

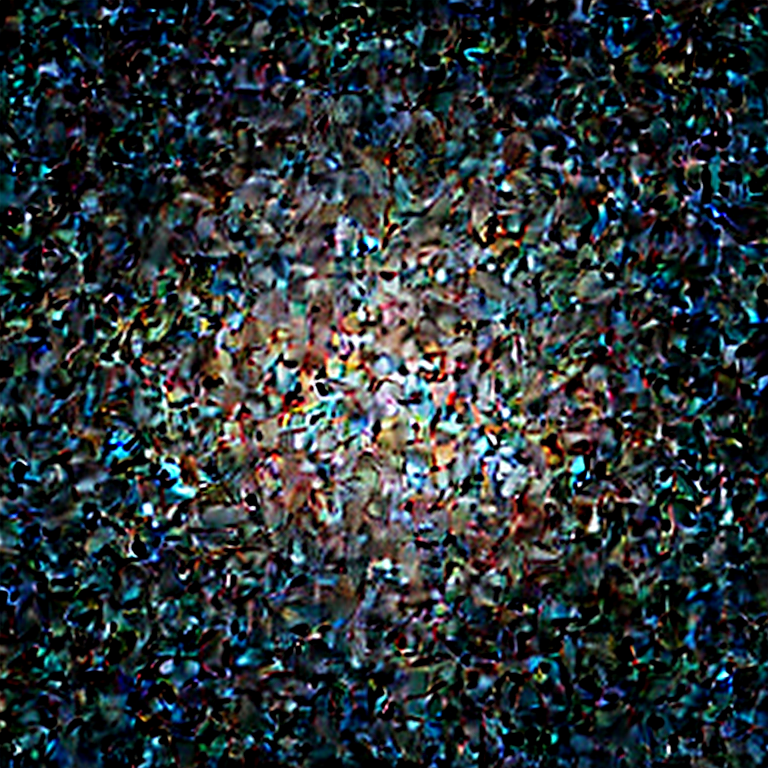

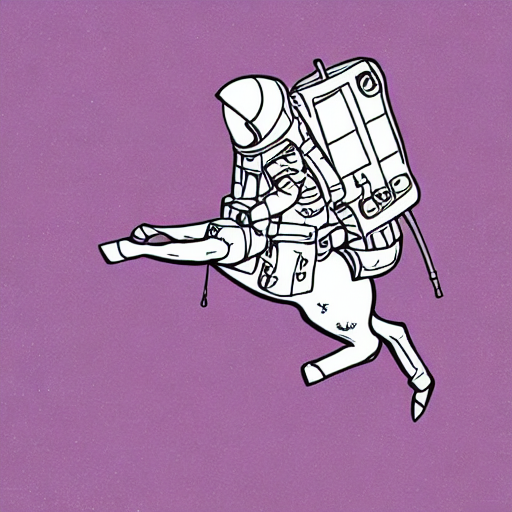

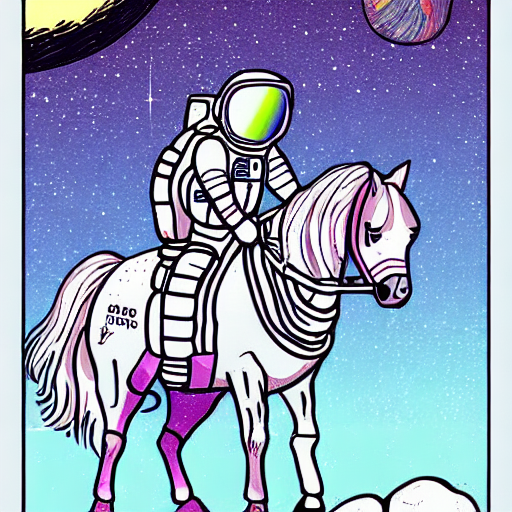

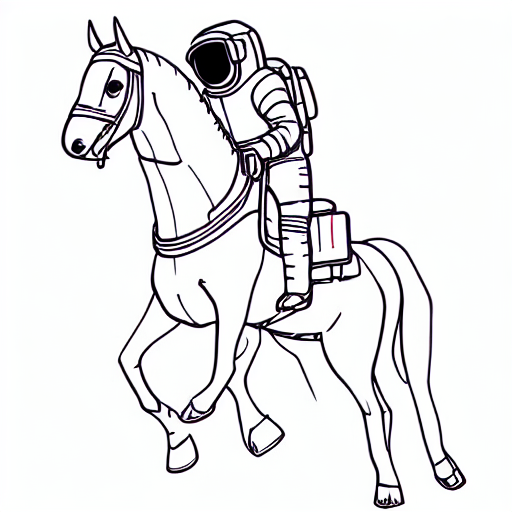

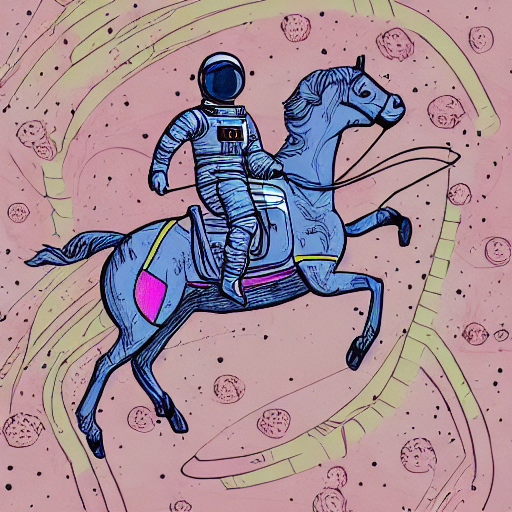

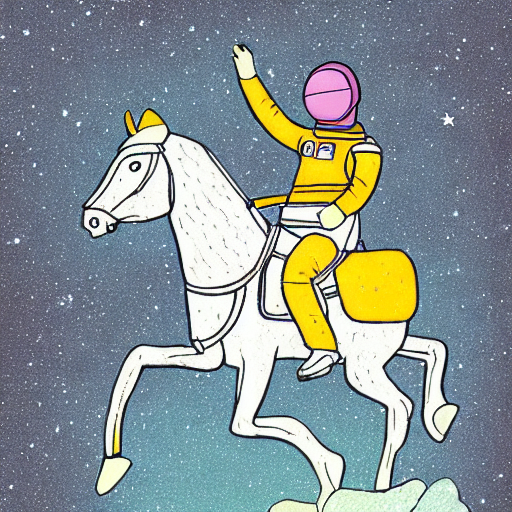

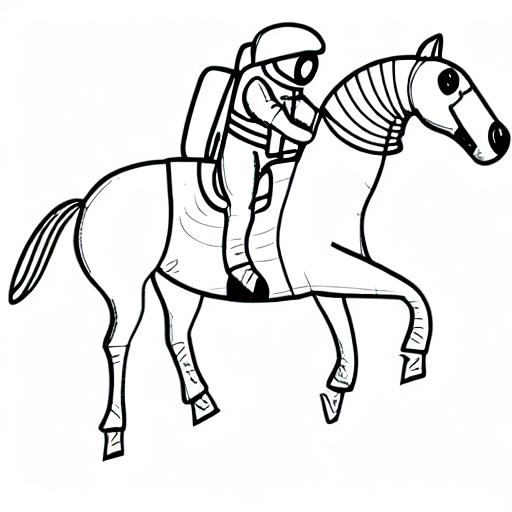

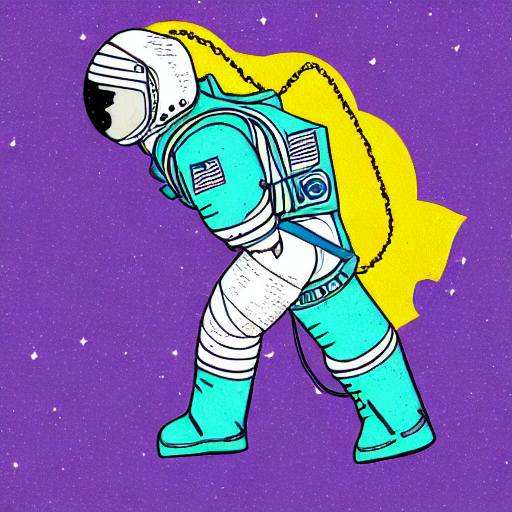

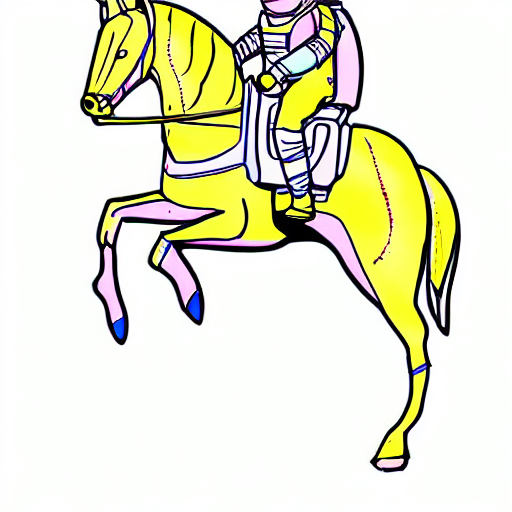

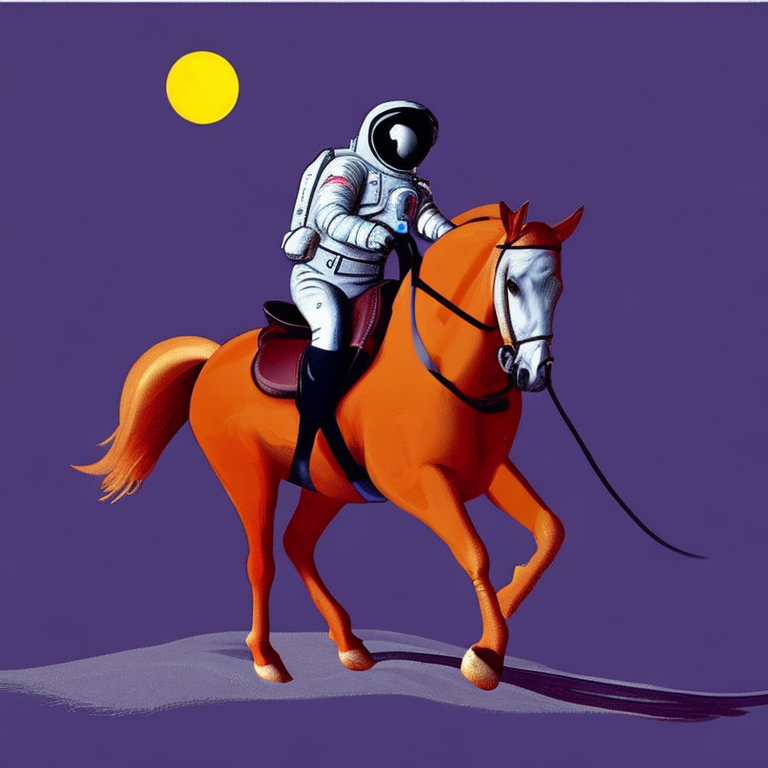

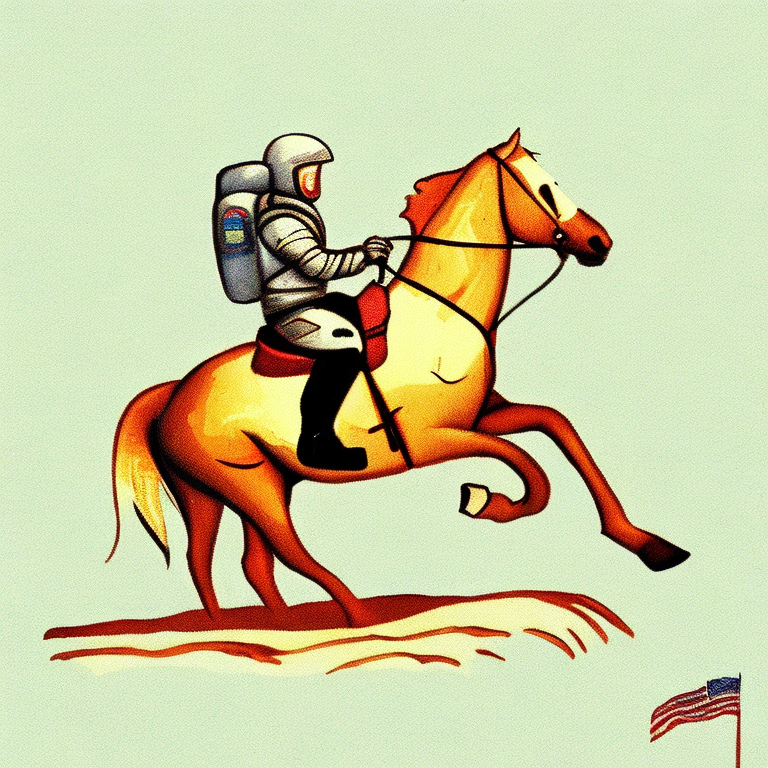

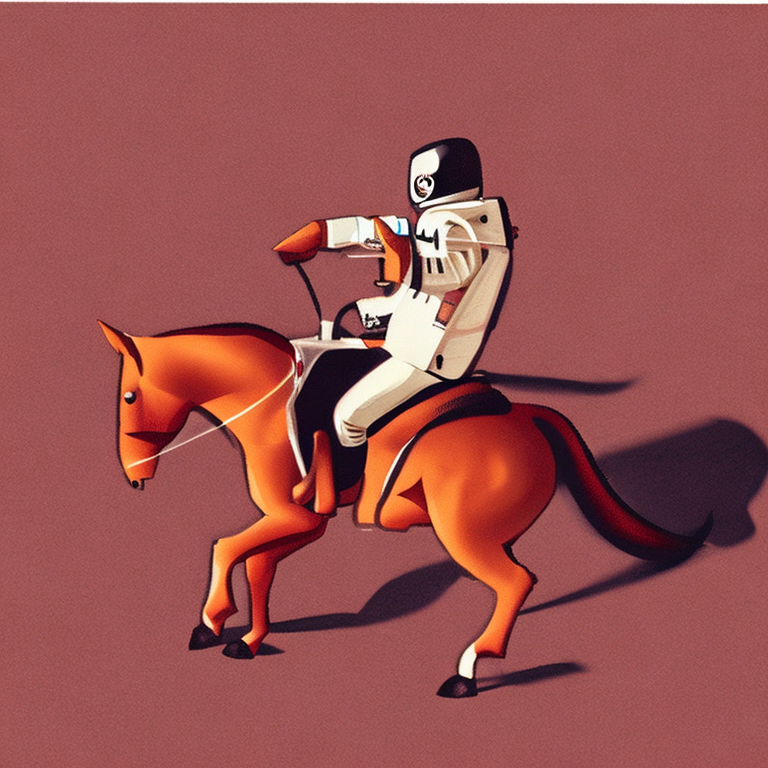

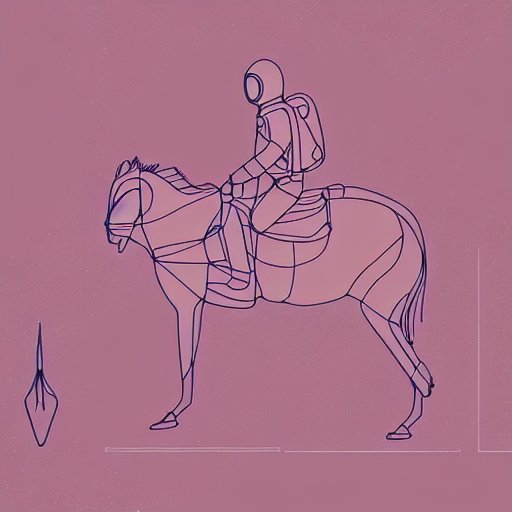

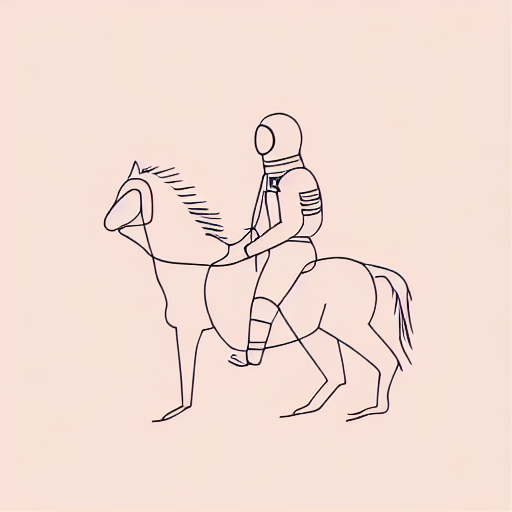

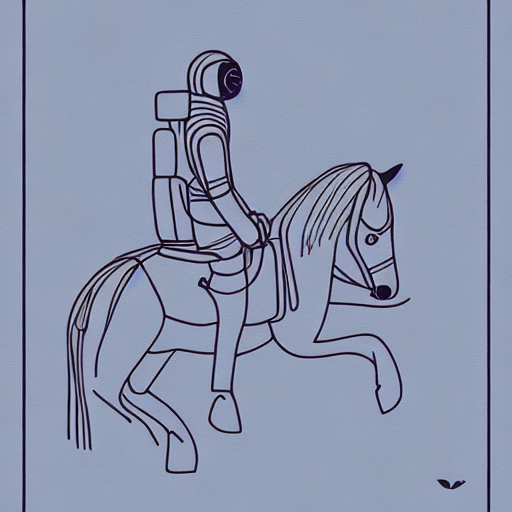

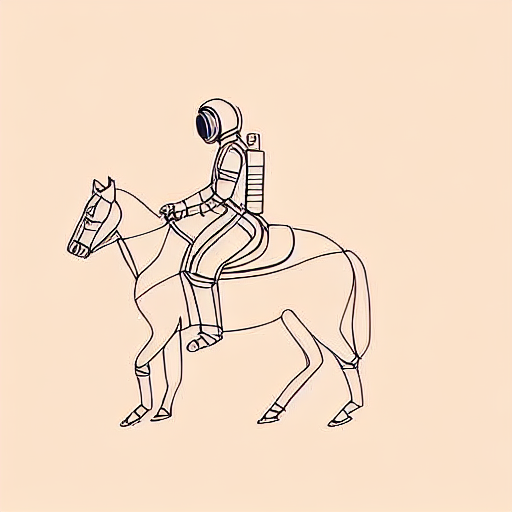

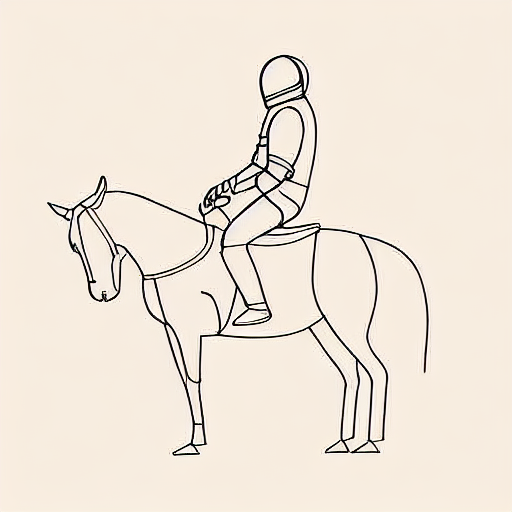

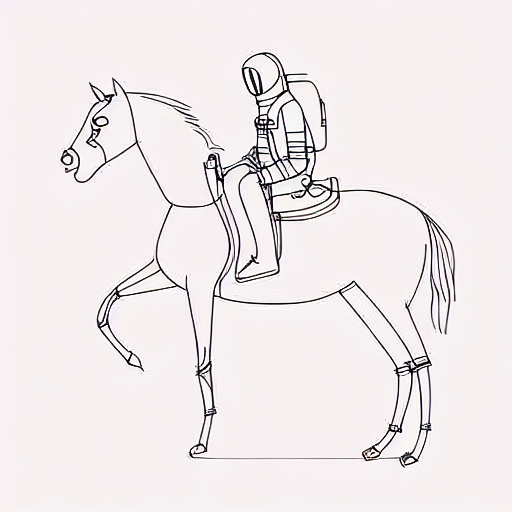

Model: CompVis/stable-diffusion-v1-4 + sd-concepts-library/line-art

Prompt: "An astronaut riding a horse in the style of `<line-art>`"

| Initial latents | Generated image (original) | + Signal Leak | Generated image (ours) |
|---|---|---|---|